Meltdown - How and Why

Let us start by exploring the key concepts required:

- Out of Order/ Speculative execution

- Virtual Addresses and memory mapping

- Memory Protection

Out of Order Execution

Whenever we run a program, we expect that the CPU executes the instructions line by line. Indeed, the entire field of computer software relies on this simplifying assumption of in-order execution by the CPU. From the perspective of the CPU though, this would result in an incredible waste of power and computation.

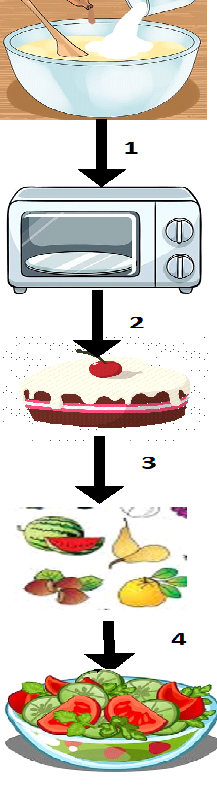

For example, let us say we want to bake a cake and prepare a salad. If we were to follow in-order execution, we would have to wait for the cake to finish baking before we could even start collecting ingredients for the salad!

From the figure, we can see that there are 4 steps needed to make the cake and salad.

- Prepare the cake batter

- Place the cake for baking

- Wait (up to an hour!) for the cake to finish baking

- Prepare the salad

Isn’t it a gross waste of time to sit twiddling our thumbs until the cake is baked?

Couldn’t we make the salad while waiting for the cake to bake?

Of course we could! The key idea is that the salad does not depend on the cake in any way.

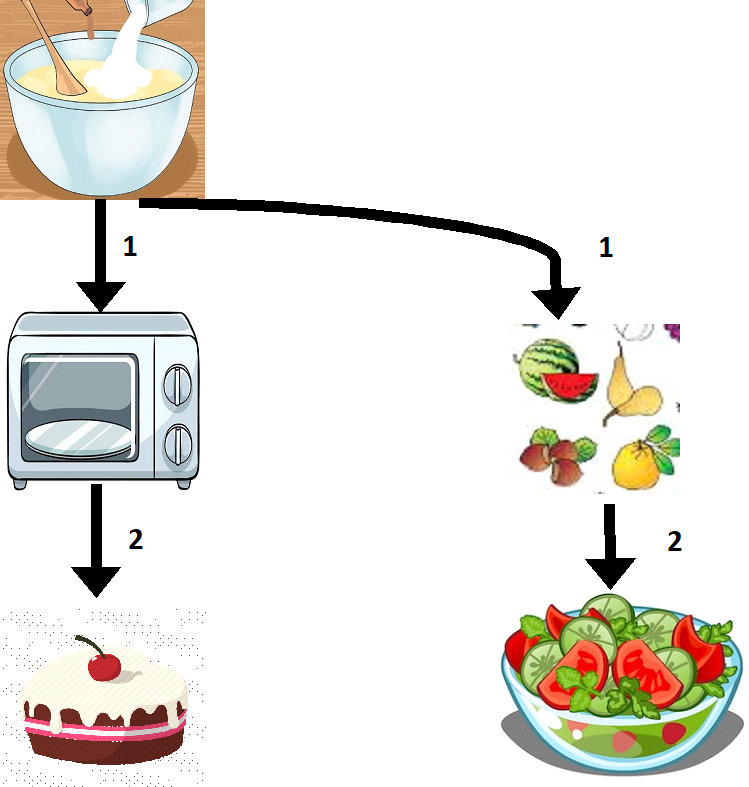

Let us now re-attempt the same exercise, but with out of order execution.

From the figure, it becomes apparent that we are saving two steps by preparing the salad alongside baking the cake.

Coming back to the world of CPUs, let us replace the baking with a memory load, and the preparation of the salad with an ALU operation. The CPU begins executing arithmetic operations while it waits for some unrelated data to be loaded from memory. The visible result is the same as with in-order execution; only the waiting time is put to use. Out of order execution has become a standard feature of most modern high-performance CPUs.
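
To make the cake and salad analogy concrete, here is a minimal C sketch. The function `load_and_compute` and its parameters are hypothetical names made up for this illustration. Whether the hardware actually overlaps the two operations depends on the CPU and on what the compiler emits; the point is only that the arithmetic does not depend on the load, so an out of order core is free to start it early.

```c
#include <assert.h>
#include <stddef.h>

/*
 * Hypothetical helper for illustration only.
 * Returns table[index] + 1 and writes an unrelated arithmetic
 * result to *independent_out.
 */
int load_and_compute(const int *table, size_t index, int a, int b,
                     int *independent_out)
{
    assert(table != NULL && independent_out != NULL);

    int loaded = table[index];     /* "bake the cake": may stall on a cache miss */
    *independent_out = a * b + 7;  /* "make the salad": does not use `loaded`    */
    return loaded + 1;             /* uses `loaded`, so it must wait for the load */
}
```

The last line is the only one that has to wait for the load. The multiplication and addition in the middle can be executed while the load is still in flight, and the program cannot tell the difference from the results alone.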

Virtual Addresses and Memory Mapping (Linux)

Let us now look at how memory is mapped by the Linux Kernel.

Any program in execution is called a process.

For example, instances of Firefox, Notepad, File Explorer, etc. are all processes.

Each process has its own set of memory assigned by the kernel. For obvious reasons, one process should not be able to figure out the location or content of memory belonging to another process.

To enforce this, we have a “Virtual Address Space” for each process. The kernel assigns each process its own exclusive address space.

Virtual addressing (paging) serves several other purposes, such as simplifying I/O and memory accesses, which are beyond the scope of this post.

The component of memory management that concerns us is the virtual address space (VAS).

The virtual address space of a process is the set of virtual memory addresses assigned by the kernel.

The address space for each process is private and cannot be accessed by other processes.

Importantly, the process is only allowed to use a portion of its VAS. This is because the kernel maps its own memory into the remaining part of every process’ VAS, so that kernel services (disk I/O, system calls, etc.) can be reached quickly without switching to a different address space.

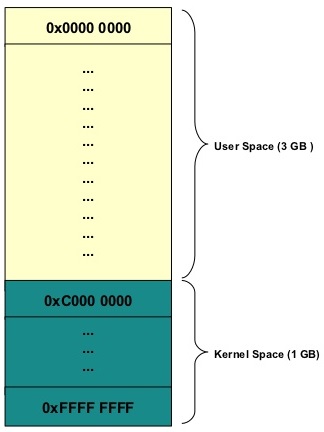

A typical VAS mapping is given in the figure below:

In this example (the classic 32-bit Linux layout), the process is allowed to use addresses in the range 0-3 GB. The kernel address space is mapped in the remaining 1 GB.
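
The split can be expressed as a single comparison. The sketch below assumes the classic 32-bit Linux layout with a 3 GB user / 1 GB kernel split, where kernel addresses start at 0xC0000000. The names `KERNEL_BASE`, `is_user_address` and `is_kernel_address` are made up for this example, and other configurations (64-bit systems in particular) place the boundary elsewhere.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Assumption: 32-bit layout with a 3 GB user / 1 GB kernel split. */
#define KERNEL_BASE UINT32_C(0xC0000000)

/* Compile-time check that the boundary really sits at 3 GB. */
static_assert(KERNEL_BASE == (3ull << 30), "kernel base should be at 3 GB");

/* True if the address lies in the part of the VAS the process may use. */
bool is_user_address(uint32_t addr)
{
    return addr < KERNEL_BASE;
}

/* True if the address lies in the part reserved for the kernel mapping. */
bool is_kernel_address(uint32_t addr)
{
    return addr >= KERNEL_BASE;
}
```

Under this assumption, 0xBFFFFFFF is the last byte the process may touch and 0xC0000000 is the first byte of the kernel mapping.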

You can think of this as getting your own cubicle, which is separated from your colleague’s cubicle, while your boss can access all of the cubicles. You and your colleagues are the user space processes, each with your own virtual memory mapping to the company data, while your employer is the all-encompassing kernel who can see everything.

The kernel ensures that the process is unable to access any of the protected (kernel space) addresses.

But how does the kernel do this?

This brings us to the next part - Memory Protection Mechanisms.

Memory Protection

As we’ve already seen, the Kernel’s entire address space (and consequently the entire system memory) is dutifully mapped out in each process’ VAS, and we need to ensure that the process can’t access anything other than the allowed addresses.

The protected addresses are marked PROTECTED by the kernel. Attempting to access them causes the CPU to raise a fault (commonly called a page fault), which interrupts the program and hands control to the kernel.

Anyone who has played around with C has no doubt come across the infamous “Segmentation fault (core dumped)” error. This occurs when an attempt is made to access pages marked as PROTECTED by the kernel. The permission check fails in the CPU and a page fault is raised. The kernel handles the fault and delivers a SIGSEGV signal to the user-space application that attempted the read or write.
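
The fault-then-signal sequence can be observed from user space. The sketch below is one possible way to do it on a POSIX system, not how the kernel itself checks permissions: it maps a page with no access rights, tries to read from it, and catches the resulting signal instead of crashing. The helper names `map_protected_page` and `can_read` are invented for this example, and SIGBUS is handled as well because some systems report protection violations that way.

```c
#define _DEFAULT_SOURCE  /* exposes MAP_ANONYMOUS and sigsetjmp on glibc */
#include <assert.h>
#include <setjmp.h>
#include <signal.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

static sigjmp_buf recover_point;

/* Signal handler: jump back to the point saved in can_read(). */
static void on_fault(int signo)
{
    (void)signo;
    siglongjmp(recover_point, 1);
}

/* Map one page that may be neither read nor written (MAP_FAILED on error). */
void *map_protected_page(void)
{
    size_t page_size = (size_t)sysconf(_SC_PAGESIZE);
    return mmap(NULL, page_size, PROT_NONE,
                MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
}

/* Returns 1 if one byte at addr could be read, 0 if the read faulted. */
int can_read(const volatile char *addr)
{
    struct sigaction catcher, old_segv, old_bus;
    int readable;

    assert(addr != NULL);
    memset(&catcher, 0, sizeof catcher);
    catcher.sa_handler = on_fault;
    sigemptyset(&catcher.sa_mask);
    sigaction(SIGSEGV, &catcher, &old_segv);
    sigaction(SIGBUS, &catcher, &old_bus);

    if (sigsetjmp(recover_point, 1) == 0) {
        char sink = *addr;  /* faults if the page is protected */
        (void)sink;
        readable = 1;
    } else {
        readable = 0;       /* we arrived here from on_fault() */
    }

    /* Put the previous handlers back. */
    sigaction(SIGSEGV, &old_segv, NULL);
    sigaction(SIGBUS, &old_bus, NULL);
    return readable;
}
```

Calling `can_read` on an ordinary local variable returns 1, while calling it on the page returned by `map_protected_page` returns 0. Without the handler, the second call would end the program with the familiar segmentation fault.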

Putting it all Together

We have seen that an interrupt is generated by the CPU for an illegal memory access. However, the interrupt is not generated immediately when the access occurs during out of order execution, since the exception caused by the protection check is buffered before being output (for details, see Speculative Execution). After the out of order execution completes, the CPU outputs the buffered result (generates an interrupt), and as before, the kernel generates an exception.

Meltdown leaks the data of protected locations by abusing out of order execution. If the result of a load from protected memory is temporarily brought into the CPU cache during the buffering period, it is possible to determine the value at that location, and hence “melt down” the kernel’s memory protection.

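For example, let us consider a trivial program:

```c
#include <emmintrin.h>  // _mm_clflush (x86 SSE2)
#include <math.h>       // pow

int main(void)
{
    static int buff[16] = {1};

    // STEP 1: Repeatedly perform expensive load, store, and ALU operations
    for (int i = 0; i < 1000; i++)
    {
        int junk = buff[0];            // Load data
        _mm_clflush(&buff[0]);         // Flush from cache (takes an address)
        buff[0] = (int)pow(junk, 10);  // Expensive ALU exponentiation, followed by store operation
    }

    // STEP 2: Access the data at protected location
    volatile int *secretPtr = (volatile int *)0xffff;  // Stand-in for a protected address
    int secret = *secretPtr;                           // Load from protected address (this faults)
    return secret;
}
```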

STEP 1 involves repeatedly performing expensive loads, stores, and ALU operations. This encourages the CPU to proceed to STEP 2 in out of order execution while it is waiting for the load/store to complete, or while it is performing the expensive ALU operation.

STEP 2 involves accessing a protected address, which in turn generates a page fault speculatively and stores it in the buffer.

The response to this speculative page fault differentiates CPUs vulnerable to Meltdown and those resistant to it.

Vulnerable CPUs load the value from the secret data into the cache.

Resistant CPUs, on the other hand, load either garbage or zero into the cache.

Once the data is loaded into the cache, and before the out of order execution can commit, it is trivial to derive the secret value using a side channel timing attack.
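
The decoding idea behind such a timing attack can be shown with a toy model. The sketch below does not measure real hardware: the cache is simulated with an array of flags, and the latencies `HIT_CYCLES` and `MISS_CYCLES` are made-up numbers. All names are hypothetical. It only illustrates the logic: one probe slot per possible byte value, the secret selects which slot gets cached, and the attacker finds the secret by looking for the one fast slot.

```c
#include <assert.h>
#include <string.h>

#define NUM_SLOTS   256  /* one probe slot per possible byte value     */
#define HIT_CYCLES   40  /* made-up latency of a cached slot           */
#define MISS_CYCLES 300  /* made-up latency of a slot that is not cached */

/* Simulated cache state: 1 if the slot is currently cached. */
static unsigned char slot_cached[NUM_SLOTS];

/* Attacker, before the attack: make sure no probe slot is cached. */
void flush_all_slots(void)
{
    memset(slot_cached, 0, sizeof slot_cached);
}

/* What a vulnerable CPU does out of order: touch the slot chosen by the secret. */
void transient_access(unsigned char secret)
{
    slot_cached[secret] = 1;
}

/* Simulated timing of one access to a probe slot. */
int simulated_access_time(int slot)
{
    assert(slot >= 0 && slot < NUM_SLOTS);
    return slot_cached[slot] ? HIT_CYCLES : MISS_CYCLES;
}

/* Attacker, after the attack: the fast slot reveals the secret byte.
 * Returns -1 if no slot was fast. */
int recover_byte(void)
{
    int best_slot = -1;
    int best_time = MISS_CYCLES;

    for (int slot = 0; slot < NUM_SLOTS; slot++) {
        int t = simulated_access_time(slot);
        if (t < best_time) {
            best_time = t;
            best_slot = slot;
        }
    }
    return best_slot;
}
```

In this model, calling `flush_all_slots()`, then `transient_access(0x5A)`, then `recover_byte()` yields 0x5A. On real hardware the flags would be replaced by actual cache lines and the latencies by measured access times, which are noisy and need repeated measurements.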

Conclusion

That brings us to the end of this post. I hope I have been able to give a clear understanding of the high level mechanics of Meltdown.

I have skimmed over the actual implementation of Meltdown to keep this beginner-friendly. I plan to cover it in a later post.

I have also skimmed over the specifics of out of order execution and speculation. Relevant links are in the references.
